Despite my best efforts over the last week or so, I think I might have to accept the fact I am getting sick.

I’ve been liking Inoreader enough I’ve taken advantage of their “Black Friday” offer, and grabbed myself a year of the Pro tier at a discount.

I’ve updated my Links page, adding RSS/feed data to every record I could, so Inoreader will pick up the feeds from my OPML file. I’ve also started adding all of the missing “follows” I have – starting with my old Feedly export, then I’ll add any missing from Aperture. So far I’ve added a bunch of blogs on various topics, and a new category for Scotland-focussed content.

Classifying sites has been one of the tricker aspects of this exercise so far! Some of my subscriptions date back 15+ years, and no site remains confined to a single topic for that long. For many I’ve been going with “what does this person do?” rather than what they’re currently writing about. Others are matched up with how I categorised them when I first subscribed (more or less). If I end up adding more categories I’ll reevaluate where some sites sit, but I’m keeping the number of categories as small as I can.

I’ve trimmed off a few dead sites, but – I confess – I’ve left just as many still on the list in the hope they spring back to life one day.

I’m giving Inoreader a quick go, based in part on Chris’s experiences using it as a Social Reader. The idea of hooking into IFTTT to create various posts on this blog based on what I read appeals to me. Inoreader allows subscribing to OPML feeds instead of importing a static file – allowing for dynamically managing my subscriptions. Chris manages this through his Following page, and I’m planning to do something similar with my Links page, although to get this working I’m going to have to go back through my list and add any RSS feed URLs to the entry.

I should take the time to get all my “followings” back in sync during the exercise. I dropped Feedly last year, and I’ve never quite finished porting everything I had there to Aperture/Monocle, or to the Links page. I’ve not synced everything I’ve added to Aperture back to the Links page either. So much housekeeping to catch up on.

On a bit of a whim, I walked the 4.6KM to work today. The majority of the route is uphill. My feet and knees hate me right now.

Even though I’m all for fulfilling civic duties, after this week, I never want to be on a jury again. I can’t imagine the toll it takes on those in the legal profession who are exposed to these matters all the time.

That’s my service over with, although I think I’m going to need to take a few days to clear my head.

Doh, guess I should probably consider looking for a new VPN provider.

A few photos from today’s walk:

“Dark mode” for this site has been one of those things on my todo list for a while. The in-development theme I’m working on has been built from the ground up to support it through the use of media queries and CSS custom properties — but I hadn’t actually implemented it. After a nudge from reading this post by Jeremy I’ve finally implemented something to try out:

For now I’m borrowing Jeremy’s colours, but I’m planning to tweak these as the design evolves.

I’m starting to pull together a theme based on the changes I was thinking about a few days ago. It’s nothing “earth-shattering” or revolutionary, but it fits where my mindset is right now.

I need to think carefully about how I handle Post Kinds, like Bookmarks and Likes… these have had such divergent ways of formatting over the years that there’s probably no one-size-fits-all approach I can take. So far I’ve managed to keep everything IndieWeb-compatible too, with h-feed, h-entry, h-card, and other building-block microformats working more-or-less as they do now (as far as I’ve tested with X-Ray, Parse This, Indiewebify, etc).

Layout is being handled by Flexbox for the moment. This article has continued to be invaluable. I could/should switch to CSS Grid for the final version, but I haven’t yet. So far only the content has been worked on. I still need to figure out the header/footer/secondary information.

If I can get a good run at things, I should have the theme finished some time in November. October is unlikely, as my evenings are fully-booked until at least the 19th.

Ericlaw has a nice write up about the history of the XSS filter. Unfortunately it doesn’t help me fix my ongoing woes (which I’m nearly convinced must be a false-positive), but it does give a few insights and context to the feature.

Apologies if you got/get a webmention from my dev site – I just refreshed it with some more recent content, so I could see how things were looking with a wider variety of posts.

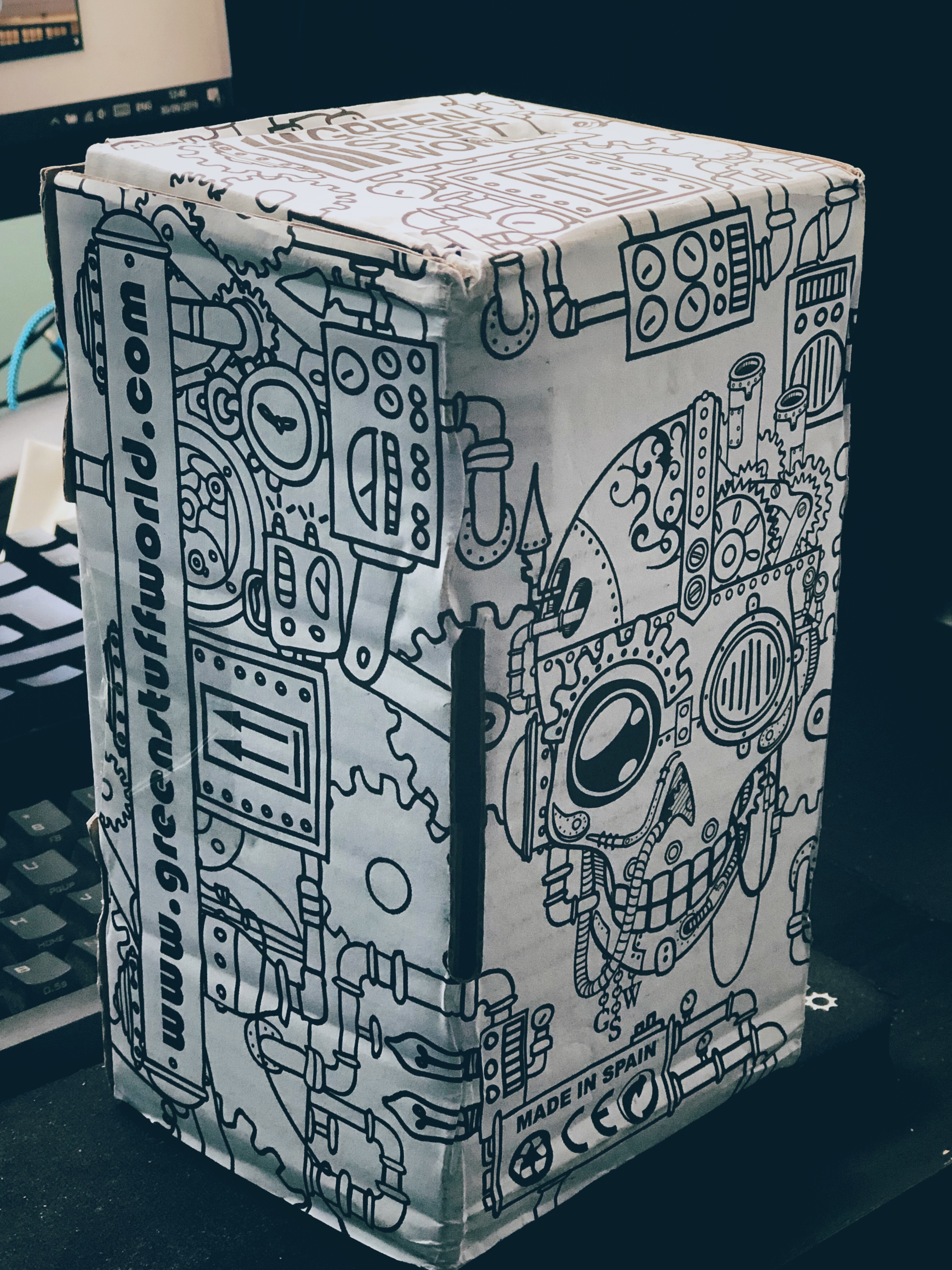

I really like this new shipping packaging from Green Stuff World! Very striking, and subtly “on brand”.

Today is off to a great start already. The main corporate network seems to be down. I’d connect to another network and remote in… but I’ve forgotten my work phone, which has the security token app required for the VPN. Classic Monday.

It’s still before 10am, and already I’ve had to put on my most soothing classical music playlist, *and* stress-ate a small cake-based treat. Some people are here to test us.

One reason I prefer taking the train when travelling within Scotland is because it can be almost insufferably pretty

Disappointed and a bit angry to find someone had drawn swastikas and celtic cross in the morning condesation on my bus stop this morning. Even though it wasn’t a permenant defacement and would’ve vanished in a couple of hours, I scrubbed that shit out as soon as I spotted it.

I wouldn’t have had time to see it if I hadn’t missed my normal bus and had to wait for the next one, so now I’m going to be looking warily at the people getting on the same time as me.

Half the people in my office have been cited for jury duty in the last 3-4 months, and I guess today was my turn!

I generated 1.1TB of string data for a project, overnight. It’s just one big text file on a disk. Now I just have to grep through it to find the particular patterns I need… that 1.1TB will probably come down to 500-600GB by the end of it, but I can see the pattern-matching process taking the rest of the weekend…

Python and command-line utilities have been super useful at generating this data, and definitely helped the process along. As a reminder to myself, these are the commands I’m using to “post-process” the data:

Look for lines in input.csv which don’t match this pattern, and echo them to output.csv:

$ grep -vE "([A-K]{3}),\1" input.csv > output.csvSplit output.csv into files 800MB in size, called data_n, where n is an 8-digit incremental number (e.g. data_00000001):

$ split -a 8 -d -b 800M output.csv data_For each data file in the directory, give it the .csv extension:

$ for f in data*; mv "$f" "$f.csv"; done